DIY alternative to the Nibe Modbus module - Page 10

Hi, we just cross-posted... First, a small issue: your decodeMessage can be improved and simplified. For decoding 32-bit values, you distinguish between message length 80 and everything else. I think it would be better to distinguish between message type 104 (that's the one where you have length 80) and type 106 (the other one). Message type 106 always sends its 4 data bytes one after the other, while type 104 uses two 16-bit data fields; therefore, you have to skip the two bytes in between (they usually contain the second register number).
Hmm... this contains the start of the next message: 92,0,32,238,0,206,92 -> this is type 238. nibegw.c tries to read up to 200 bytes here. The serial port delivers everything that is available. If a message is too short, nibegw seems to ignore it and continue with the next message (it compares data[4] with the length of the message). In that case, it does not start to read the next message as part of the old one; that happens in the next read call for the next message. By the way, that's why I never see a message with the wrong length: nibegw does not forward such messages to UDP.
Why does your code start getting the next message at all? There should be a delay in between, and the serial read should only return the first message; everything else comes later. Or do you have a timing problem, in the sense that the next message arrives so soon after the corrupted one that you read too much? Another option would be a hardware problem. Does this really lead to an alarm? If yes, I would just try nibegw for a similar amount of time. If that works, it is software/timing; if it also leads to an alarm, it is a problem with the hardware. I have no idea what impact garbage collection has on this specific timing issue. node.js does a lot of garbage collection (just monitor its memory usage with "ps aux|grep node"), so this might lead to a situation where you miss the small delay between the two messages.
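The type-based distinction above can be sketched as follows. This is a minimal illustration, not NibePi's decodeMessage: it assumes the frame layout 0x5C 0x00, address, type (data[3]), length (data[4]), data, checksum, little-endian byte order, and that `offset` points at the first value byte right after a register number. The function name readUInt32 is hypothetical; the read-answer bytes are taken from a frame logged later in this thread, while the favorites frame is made up.

```javascript
// Sketch: read an unsigned 32-bit register value from a Nibe frame.
// Assumptions (not taken from the thread): little-endian byte order and
// `offset` pointing at the first value byte after the register number.
function readUInt32(data, offset) {
  const type = data[3]; // message type byte
  let b;
  if (type === 106) {
    // 0x6A (read answer): the four value bytes follow each other.
    b = [data[offset], data[offset + 1], data[offset + 2], data[offset + 3]];
  } else if (type === 104) {
    // 0x68 (favorites): two 16-bit fields; skip the two bytes in between,
    // which usually hold the second register number.
    b = [data[offset], data[offset + 1], data[offset + 4], data[offset + 5]];
  } else {
    throw new Error(`unsupported message type ${type}`);
  }
  // Combine little-endian; ">>> 0" keeps the result unsigned.
  return (b[0] | (b[1] << 8) | (b[2] << 16) | (b[3] << 24)) >>> 0;
}

// Type 106 frame: register 0xAD0C followed by value bytes ED 02 02 00.
const readAnswer = [0x5c, 0x00, 0x20, 0x6a, 0x06, 0x0c, 0xad, 0xed, 0x02, 0x02, 0x00, 0x00];
// Hypothetical type 104 frame with the same value split over registers
// 0xAD0C/0xAD0D (the checksum byte is a placeholder and is not checked).
const favorites = [0x5c, 0x00, 0x20, 0x68, 0x08, 0x0c, 0xad, 0xed, 0x02, 0x0d, 0xad, 0x02, 0x00, 0x00];
console.log(readUInt32(readAnswer, 7), readUInt32(favorites, 7)); // prints: 131821 131821
```

Keying on the type byte instead of the length keeps the decoder correct for type 104 frames of any length, not just the 80-byte case.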
Maybe another approach to debugging this issue: if you add an option to the backend to read from UDP instead of serial (i.e., an nibegw adapter), drop ACK/NACK, and write to UDP instead of serial (as a replacement object for the low-level communication), you could try all your logic with nibegw as the real-time unit. If that works perfectly, then the problem is the timing of serial access from node.js. I do the reading part this way (just the relevant parts):
    var PORT = 9999;
    var HOST = '192.168.1.2'; // your IP address, likely localhost for a local nibegw
    var dgram = require('dgram');
    var server = dgram.createSocket('udp4');
    server.bind(PORT, HOST);
    server.on('message', function (message, remote) {
      var data = Array.from(message);
      // data[3] is the message type: 104 (0x68) or 106 (0x6A)
      if (data[3] === 104 || data[3] === 106) {
        decodeMessage(data);
      }
    });
decodeMessage is very similar to yours... so this is really simple. Greetings, Jan

Let's continue with the forum ping-pong... I understood that. My intention was: among my 25000+ messages there could be one whose calculated checksum really is 0. In that case, the checksum field should contain 0000. If there is some unknown protocol speciality here, nibegw will drop that message. If not, there should occasionally be a message with 0000. If there is no such message among very many, then there is something we do not know.
Not yet. It has a verbose mode, but I guess that produces too much output and not what we are interested in. What are you interested in? So far, I think NACKed messages and too-short messages should be logged, right? I can add a few printfs and test this in the afternoon (I do not want to do that remotely in case something breaks). If this gives significant numbers, I will add a counter and maybe also forward the counter and the messages to UDP. If I change the first byte of such a message to something other than 5c, the client can then easily log it. Greetings, Jan
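On the client side, the marker idea from the last paragraph could look like this. This is a hypothetical sketch: the thread does not define which byte value a patched nibegw would use for error reports, so anything whose first byte is not 0x5C is treated as one, and the names makeClassifier and classify are illustrative only.

```javascript
// Hypothetical client-side handling of the proposal above: normal frames
// start with 0x5C, error reports from a patched nibegw start with any other byte.
function makeClassifier() {
  const counts = { messages: 0, errors: 0 };
  function classify(packet) {
    if (packet.length > 0 && packet[0] === 0x5c) {
      counts.messages += 1;
      return "message";
    }
    counts.errors += 1; // log the raw bytes here, e.g. as hex
    return "error";
  }
  return { classify, counts };
}

const { classify, counts } = makeClassifier();
classify(Buffer.from([0x5c, 0x00, 0x20, 0xee, 0x00, 0xce]));
classify(Buffer.from([0x01, 0x00, 0x20, 0x68])); // hypothetical error report
console.log(counts); // { messages: 1, errors: 1 }
```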

That seems enough. Plus all the checksum errors, with the raw data.

My knowledge of serial buffers is probably too limited. I thought the heat pump sends its messages out and I need to catch them as soon as possible? Or is that why it is called a buffer, because it buffers the data while it waits to be handled?

Hi, usually it works like this: the operating system has a buffer in which it stores incoming data. This buffer has a size limit; once that is reached, new data is dropped. In our case, that limit is far beyond what happens here (at least I guess so). A lot of this can be configured; just look into nibegw.c:
    options.c_cc[VMIN] = 1;  // Min character to be read
    options.c_cc[VTIME] = 1; // Time to wait for data (tenth of seconds)
...and probably more, as defined in the appropriate header file. When I read from such a device, I specify how much I want to read. nibegw.c assumes 200. If there is less than 200, I get everything that is there (that is the standard case). As the next message arrives later, I will not get it. If I read much too late (after the next message has started), I obviously get not only the current message but also parts of the next one. This seems to have happened in your example. I can imagine that node.js did some garbage collection or whatever and was too busy to read on time.
One solution would be to treat everything you get as a stream and determine yourself where a message begins. In that case, you might miss the chance to ACK the first message, but you get the second one correctly. I guess that a single missed ACK is no problem, but I'm not sure with respect to alarms.
The other solution is the one you don't want: use nibegw as the low-level serial reader and connect nibepi via UDP. This can also be done locally; then I would give nibegw a very high priority (nice -n -20 nibegw). This very simple C program does not do any garbage collection or anything else, so it should be much better from a timing perspective. My system has now been running for more than 50 hours with no alarm at all. In this case, your node.js application will get its data as UDP packets. The way nibegw sends them ensures that every packet is exactly one message. So with one read (code above) you get exactly one message. Everything else is already blocked/filtered in nibegw.
Logging in nibegw: I will start with error printfs. If there are many errors, I will add UDP logging for them. If not, we know it runs stably. Greetings, Jan
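Treating the serial input as a stream can be sketched like this: whatever one read returns is split into complete frames, and an incomplete tail is kept for the next read. It is a simplified sketch assuming the frame layout 0x5C 0x00, address, type, length, data, checksum (length + 6 bytes in total); it does not collapse escaped 0x5C 0x5C pairs or verify checksums, and splitFrames is a hypothetical name. The example input is the byte sequence 92,0,32,238,0,206,92 quoted earlier in the thread (a complete type-238 frame followed by the start of the next one).

```javascript
// Split one read() result into complete frames plus an incomplete tail.
// Simplified: ignores 0x5C 0x5C escaping and does not check the checksum.
function splitFrames(buf) {
  const frames = [];
  let pos = 0;
  while (pos < buf.length) {
    const start = buf.indexOf(0x5c, pos);
    if (start === -1) break; // no start byte left: the rest is noise
    if (buf.length - start < 5) {
      return { frames, rest: buf.subarray(start) }; // header incomplete
    }
    const total = buf[start + 4] + 6; // 5 header bytes + data + checksum
    if (buf.length - start < total) {
      return { frames, rest: buf.subarray(start) }; // data still missing
    }
    frames.push(buf.subarray(start, start + total));
    pos = start + total;
  }
  return { frames, rest: Buffer.alloc(0) };
}

// A complete type-238 frame followed by the first byte of the next frame.
const { frames, rest } = splitFrames(Buffer.from([92, 0, 32, 238, 0, 206, 92]));
console.log(frames.length, [...rest]); // 1 [ 92 ]
```

On the next read, `Buffer.concat([rest, chunk])` would be passed in again, so a frame split across two reads is still recovered.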

Hi, checksum 0000 is possible:
    2019-12-03;13:50:17 5c00206a060caded0202000000 13 13 ok
Now I'm adding failure output to nibegw.c. Greetings, Jan
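A quick way to confirm that this frame is valid: the checksum is an XOR over the bytes from the address byte up to the end of the data. The sketch assumes that layout (start bytes 0x5C 0x00 excluded, data[4] giving the data length); for this frame, the XOR of 20 6a 06 0c ad ed 02 02 00 is 0x00, which equals the stored checksum byte. The function name nibeChecksum is hypothetical.

```javascript
// XOR checksum over address, type, length and data bytes (indices 2 .. len+4).
// Assumed layout: 0x5C 0x00 addr type len data[len] checksum.
function nibeChecksum(frame) {
  const dataLen = frame[4];
  let checksum = 0;
  for (let i = 2; i < dataLen + 5; i++) checksum ^= frame[i];
  return checksum;
}

// Logged frame from the post above; bytes beyond the length field's span are ignored.
const frame = Buffer.from("5c00206a060caded0202000000", "hex");
const expected = frame[frame[4] + 5]; // checksum byte stored in the frame
console.log(nibeChecksum(frame).toString(16), expected.toString(16)); // 0 0
```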

Hi, first results. I was wrong about how nibegw works and how it reads the serial port. It works as follows (from the code): it reads as much as possible from the serial port into a buffer. Then it scans this buffer for 0x5c to find the start of a message. When it finds one, it copies the following bytes one by one into an array called message. For each byte (!), it calls checkMessage. There are four cases:
- Invalid message: the length is two or more and it does not start with 0x5c 0x00. checkMessage returns -1, and the main loop breaks in order to scan for the next start.
- Message not yet finished: the current length of the message is less than message[4] + 6. checkMessage returns 0, and the next byte is copied, and so on, to scan over the message.
- Message long enough, wrong checksum: now the checksum is calculated; if it is wrong, -2 is returned.
- Message long enough, correct checksum: the length is returned.
The main loop then sends a NACK (checksum error), processes the message (ACK, etc.) if it is correct, or scans for a new start if the message was invalid. Furthermore, it breaks if the message becomes too long for the array, but this can only happen if message[4] indicates a message longer than 200 bytes (which can only happen in case of a failure, because all known messages are shorter).
So, with respect to messages, nibegw only distinguishes FOUR cases:
- perfect message -> continue
- wrong checksum -> NACK
- invalid start -> search for new start field
- too long message (due to a corrupted length field) -> search for new start field
In particular, the case "too short message" cannot occur, because nibegw always considers message[4] bytes (+ header + checksum). If the message really was too short (because a byte was missed), this results in a checksum error, as part of the next message is interpreted as the checksum (to fill the message up to its specified length). The next message is destroyed that way, so overall this might result in two missed ACK/NACKs.
This way, the incoming stream is continuously scanned and sorted into messages. I therefore added printf output for the three error cases "invalid", "wrong checksum" and "too long message". So far, none of them has occurred, so let's see whether they happen at all. I have not added message output yet because I first want to see whether errors occur. I will report later. I'm not completely sure about your code, but somehow you seem to miss data, because you get too-short messages. If you want, I can give you the changed nibegw.c so you can check whether your hardware is the problem (but my extension is really simple... just three printfs). Another finding: missing some ACKs does not matter. I was too lazy to disable Modbus for changing and restarting nibegw, so I just stopped nibegw with Ctrl-C, compiled the new version and started it again. This took 1..2 seconds and did not produce an alarm. The same happened yesterday when I stopped nibepi and started nibegw again. Greetings, Jan
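The byte-by-byte scan described in this post can be modelled in JavaScript. This is a sketch of the behavior as described here, not a port of nibegw.c: the return codes follow the description (-1 invalid, 0 incomplete, -2 wrong checksum, otherwise the frame length), the 200-byte limit is taken from the post, and escaped 0x5C 0x5C pairs are not collapsed. The names scan and MAX_LEN are hypothetical.

```javascript
const MAX_LEN = 200; // array size mentioned in the post

// -1: invalid start, 0: not complete yet, -2: wrong checksum, >0: frame length.
function checkMessage(msg) {
  if (msg.length >= 1 && msg[0] !== 0x5c) return -1;
  if (msg.length >= 2 && msg[1] !== 0x00) return -1;
  if (msg.length < 6) return 0;
  const dataLen = msg[4];
  if (msg.length < dataLen + 6) return 0; // keep copying bytes
  let checksum = 0;
  for (let i = 2; i < dataLen + 5; i++) checksum ^= msg[i];
  return checksum === msg[dataLen + 5] ? dataLen + 6 : -2;
}

// Copy bytes one at a time and call checkMessage after each byte.
function scan(bytes) {
  const results = [];
  let msg = [];
  for (const b of bytes) {
    if (msg.length === 0 && b !== 0x5c) continue; // hunt for a start byte
    msg.push(b);
    if (msg.length > MAX_LEN) {
      results.push("too long"); // corrupted length field
      msg = [];
      continue;
    }
    const r = checkMessage(msg);
    if (r === 0) continue;
    if (r === -1) {
      results.push("invalid");
      msg = b === 0x5c ? [b] : []; // the offending byte may itself be a start
    } else {
      results.push(r === -2 ? "checksum" : "ok"); // NACK or ACK
      msg = [];
    }
  }
  return results;
}
```

Because the length field alone decides where a frame ends, a missing byte surfaces as a "checksum" result rather than as a short message, which matches the reasoning in the post.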

I'll send you a PM, and I'd like to test your modified code. I have the same understanding of how nibegw checks the message, and I have tried to replicate that. But I don't loop through every byte. Some people say that is slow, so I use findIndex, which returns the index where 5C is found. Then I concat to get the whole message. If I look at the raw data when I get a short message, the bytes are missing there too.

Hi, I just sent it to you together with a simple UDP receiver. Then it looks like you do not get the missing bytes at all, because they get dropped by the hardware or by node.js serial handling. Therefore, the best approach really is to test my modified nibegw. If it prints something, then your hardware behaves differently from mine (faulty?). Here, it has been running for 150 minutes without any error. It sends the favorites to my UDP<->MQTT translator, and my logging client additionally requests one register every 20 seconds (that's incoming UDP from the perspective of nibegw). If you get errors, try running nibegw in isolation, with nothing else on that Pi. Since it sends UDP ("fire and forget"), it does not care whether anybody is listening. If you want to run some JS code in parallel (such as the simple UDP receiver), I would start nibegw with nice value -20. Since your Pi Zero is single-core, you cannot use core isolation as I do on my quad-core Pi. If you want to test UDP as part of nibepi, I can also provide you with the sending part (which is as simple as the receiving side I already sent you). Greetings, Jan

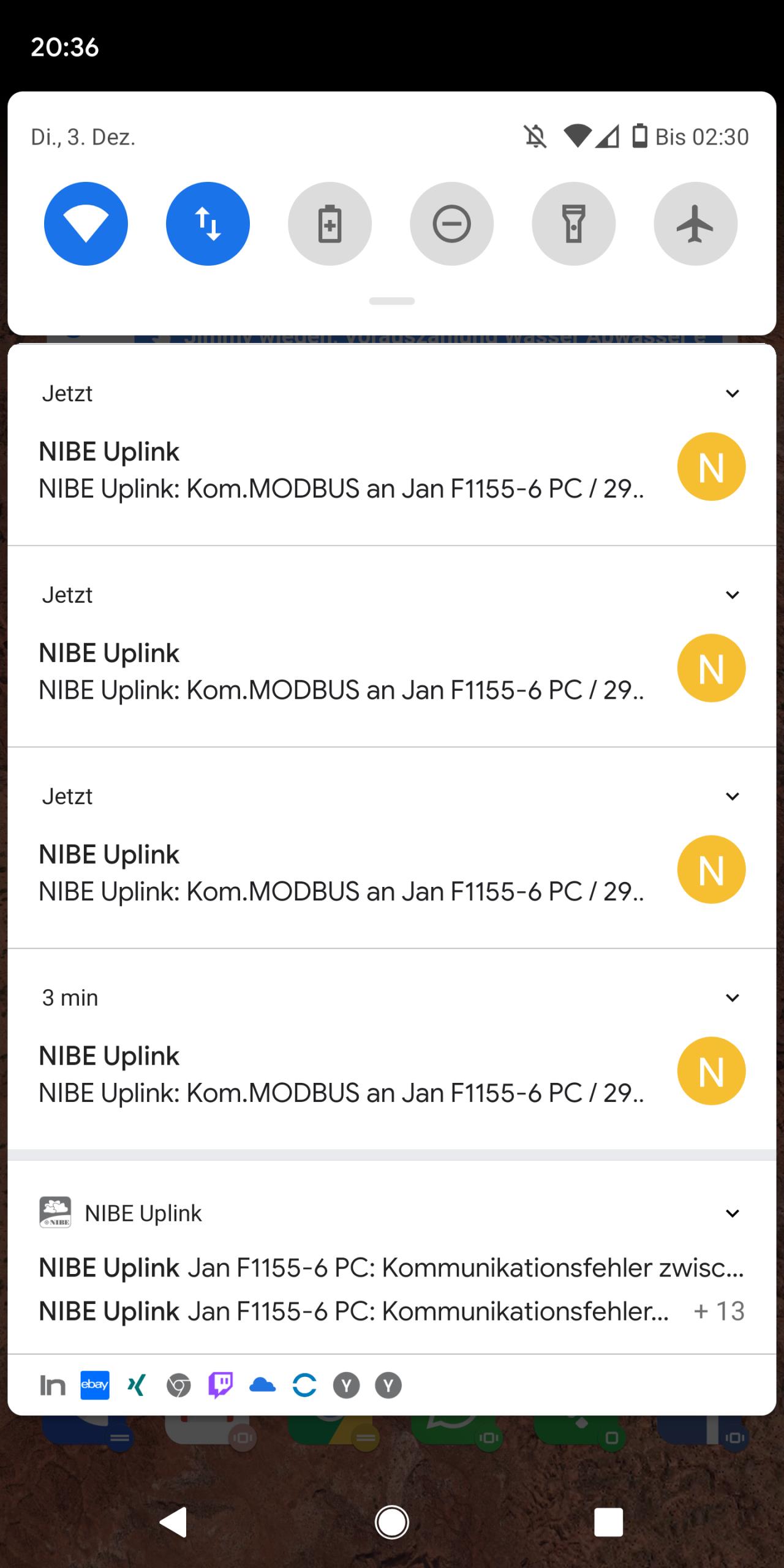

Haha, that was quite a flood of communication errors.

Hi, yes... I reconnected Uplink, and everything that had piled up during the testing came through. But now it should be quiet, because Nibegw+UDP<->MQTT is running very stably and without any problems. Since Sunday I have been using it for regular logging of 41 values per minute and am enjoying the real-time COP (AZ): Spread: 2.6, P_th: 2685 W, P_ko: 402.8 W, P_pu: 29.3 W, P_el: 432.1 W, AZ: 6.214. Best regards, Jan
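The logged values are consistent with the electrical power being the sum of compressor and pump power, and with the COP (AZ) being thermal power divided by electrical power:

```latex
P_{el} = P_{ko} + P_{pu} = 402.8\,\text{W} + 29.3\,\text{W} = 432.1\,\text{W}
\qquad
AZ = \frac{P_{th}}{P_{el}} = \frac{2685\,\text{W}}{432.1\,\text{W}} \approx 6.214
```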

Perfect. 👍

Without knowing the code of findIndex(), I bet that it loops over every byte to find the desired byte, because that is the only way it can be done. Processing single bytes is a bad idea if they are read byte-wise from a file, a network socket or any other peripheral. But if you operate on data in memory, it is not slow at all; that is a common misconception. So you start at a "5c", then search for the next "5c" in the buffer and, if found, interpret everything in between as a message, or, if not found, bail out somehow and discard the bytes? If that is the case, a byte error may put your parser "out of sync". Imagine a "5c" gets corrupted, or another byte turns into a "5c". This may be the cause of some of the problems you described above. I think the approach Jan described (i.e., checking every byte) is more robust against byte errors. I am starting to believe that, at least for now, this is the best option. NibeGW is super robust, since its developers have already solved all the protocol/parsing issues we are discussing here. It would also free up some development resources that could be used to improve other aspects of NibePi.

https://stackoverflow.com/questions/55246694/performance-findindex-vs-array-prototype-map It's a faster way to find the index of the start byte "5C". I'm doing what nibegw is doing: I look for the start byte, then I look at the next bytes to get the destination and type and then the message length, and then I read the bytes according to the length, just like nibegw. If I find a 5C or a double 5C, I don't care about it: either it's a doubled byte or a new start, and if it is a new start, something is wrong and I did not ACK in time. The ACK needs to come before the new start. It seems I have around 108 ms from the last byte in the buffer to the next start. NibeGW seems super robust, but since it doesn't log every error, maybe the errors I am seeing in my backend also occur in nibegw but never get shown.

Hi, no, he does not. He tries to read as many bytes as specified in the length field. That is the same behavior as nibegw. However, he loses bytes and therefore reads into the next message. If there really were a message with missing bytes, nibegw would do exactly the same. However, that has never happened since I started logging at that level.
NibePi: Have you checked the code line I mentioned in the mail I sent you? Maybe the problem sits there...
Not in nibegw. nibegw only deals with the basics; the rest is done at the receiving end of the UDP communication. I will go through their Java program to figure out whether we missed something. So far, it seems we have everything (I already briefly scanned their code), but I will recheck.
That's why I added the logging output. At those three code positions we catch all relevant cases that might occur. My system has been running with the patched version for more than 4 hours now, without a single fault. Obviously, I'll leave it running. By the way, to make the conditions worse, I reduced the CPU speed to 600 MHz, reactivated Uplink and log to USB in parallel. So far... no effect on nibegw. I have no idea how much time we can lose if garbage collection or some other dynamic mechanism of node.js kicks in. Maybe there are some options to make this more predictable.
As I said before: before investigating further, we have to be absolutely sure that the hardware of nibepi's system is not the cause. Therefore, run the modified nibegw for some hours and see what happens. My added "logging" should catch these cases, so if it also happens with nibegw, it is most likely hardware related (because here it runs without any printout). If not, then it makes sense to investigate further. Greetings, Jan

Sorry, that line is just an old function for some error handling, which is no longer needed because of the new findings here. Are you sure your printf works? :D I'm going to run nibegw during the night to see what happens. In the meantime, I'm trying new ways to analyze the serial buffers.

Hi, just look at the code... "checkMessage" has several possible results and we catch them all (except the positive case, where we get the message on UDP). Furthermore, the case "too long" is caught in line 593, because it happens before checkMessage. If you are unsure about that, just look at line 609:
    case 0: // Message ok so far, but not ready
        break;
That's the case where our message is not yet ready: we found a new byte, but it is not the last one. Add a printf here (between "case 0:" and "break;") and you will see hundreds of outputs, one for each byte it scans. For everybody else: the line numbers above are valid for the patched version, not the original one.
With respect to my experiments: nibegw has now been running for 14 hours with no output at all. Additionally, there has been no alarm at all since I switched to the UDP solution (except the ones I created during testing when there was nothing that ACKed).
With respect to your "too short" messages: can you check whether the affected messages are always "longer" messages (containing 5c5c)? For the examples you posted that is true, but I guess you have many more. If yes, the problem seems to be related to that. Greetings, Jan

Hi, just a short report: my heat pump just finished hot water production. No alarms, no messages from nibegw. However, as always at the beginning and end of hot water production, there was a large number of messages that needed to be de-escaped (messages containing 0x5c5c). Greetings, Jan
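De-escaping can be sketched as collapsing each 0x5C 0x5C pair back into a single 0x5C. This assumes, as discussed in the thread, that a 0x5C inside a message is transmitted doubled; the start byte at index 0 is left alone. The function name collapseEscapes is hypothetical, and the sample frame is made up for illustration.

```javascript
// Collapse every doubled 0x5C after the start byte into a single 0x5C.
function collapseEscapes(raw) {
  const out = [];
  for (let i = 0; i < raw.length; i++) {
    out.push(raw[i]);
    // Skip the second byte of a 0x5C 0x5C pair (never touch the start byte).
    if (i > 0 && raw[i] === 0x5c && raw[i + 1] === 0x5c) i++;
  }
  return out;
}

// Made-up frame whose data contains one escaped 0x5C.
const raw = [0x5c, 0x00, 0x20, 0x68, 0x02, 0x5c, 0x5c, 0x01, 0x00];
console.log(collapseEscapes(raw).length); // 8
```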

Sounds good. So what is your current setup? You use nibegw, which communicates via a (local) UDP socket with a custom JS script? And this script implements some functions from the openHAB binding (like parsing the registers) and from NibePi to send this data to a (remote) MQTT broker?

Hi, not exactly. I do not use NibePi at all (only the basic idea and some elements). And I also do not use anything from openHAB except NibeGW.
I run nibegw on a Pi with core isolation and nice value -20. This nibegw is unchanged except that it now contains code to print errors (which has never happened so far). It sends UDP to my Linux server (the usual packets that would otherwise be sent to openHAB).
On the server, I have a JS file that listens on UDP. It receives messages and parses them. This parser is similar to the decoding part of NibePi but simplified, because it can assume that the message is valid and already ACKed by NibeGW (and real time is also not an issue). It uses NibePi's .json to get the register descriptions and factors. The parser outputs the register values to MQTT as soon as a register is decoded (the topic names are the same as the ones NibePi uses).
In the other direction, it subscribes to MQTT in order to get the "get" and "set" requests (again, the same topics as in NibePi 1.1). These are parsed and translated into read/write request packets (again similar to NibePi). These packets are then sent by UDP to ports 10001 and 10000 of the NibeGW on the Pi; this is the NibeGW interface for write and read requests. Timing etc. is handled by nibegw: it sends a read request as soon as there is a read token and a write request after a write token.
With respect to favorites, get and set registers, I'm compatible with the MQTT topics of NibePi. I read only 0x68 and 0x6a packets (favorites and read answers), and I assume an F1155 (NibePi evaluates the identification string and sets the heat pump model automatically in order to decode the registers correctly). Furthermore, I have no register management that requests registers periodically as NibePi does; this has to be requested by the MQTT client using get. And I do not apply factors while writing, and I do not check ranges (this will be added for safety reasons).
In the end, it is a much simpler implementation of the NibePi idea, reduced to publishing favorites and registers that were requested before. Additionally, writing is supported as in NibePi, but without its safety and comfort features. For me, this works very well. As the interface is the same, it is a drop-in replacement for NibePi and vice versa: for testing NibePi yesterday, I only stopped NibeGW and started NibePi. Overall, I consider this a UDP<->MQTT translator without all the additional functionality that NibePi offers (especially the control part and the "everything in one box" approach that greatly improves user-friendliness). Therefore, it does not make much sense to develop this translator into an alternative to NibePi as "yet another solution". It would make much more sense to add an optional UDP interface to NibePi; then it would have the same functionality plus everything else it offers. Greetings, Jan
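For the request direction, a read request packet could be built as below. The thread does not spell out the packet format, so this is an assumption: the layout 0xC0, 0x69 (read request), length 0x02, register low byte, register high byte, XOR checksum over the preceding bytes is my understanding of what openHAB's Nibe binding sends to NibeGW, and it should be verified against nibegw before relying on it. Host and port are parameters; check your nibegw configuration for the read-request port. Register 40004 is only an example, and both function names are hypothetical.

```javascript
const dgram = require("dgram");

// Assumed read-request layout: C0 69 02 <reg low> <reg high> <XOR checksum>.
function buildReadRequest(register) {
  const bytes = [0xc0, 0x69, 0x02, register & 0xff, (register >> 8) & 0xff];
  bytes.push(bytes.reduce((acc, b) => acc ^ b, 0)); // checksum over all previous bytes
  return Buffer.from(bytes);
}

// Fire-and-forget send to NibeGW's read-request port (host/port are parameters).
function sendReadRequest(host, port, register, callback) {
  const socket = dgram.createSocket("udp4");
  socket.send(buildReadRequest(register), port, host, (err) => {
    socket.close();
    if (callback) callback(err);
  });
}

console.log(buildReadRequest(40004).toString("hex")); // c06902449c73
```

nibegw then forwards the request when the heat pump hands out the read token, so the client does not have to deal with timing.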

The affected messages were always 81 bytes long and included 5c5c. I found the problem yesterday. Line 107 in backend.js:
    let index = data.findIndex(index => index == 92);
    if (index != -1) {
      data = data.slice(index, data.length);
      fs_start = true;
    }
I should have guarded this with if (fs_start === false)... If a new 5C is found, it slices off all the data before it and then concatenates the buffers. That's why I'm getting short messages. I rebuilt the backend to handle the buffer directly (instead of converting it to an array) and added the if (fs_start === false). Now I have had 0 errors during the last 18 hours. I believe the problems of NibePi are solved :D I'm going to run some more tests, but it looks really promising now. I couldn't have done this without your help, JanRi, and the others who contributed. Now NibePi handles all the communication with the pump directly, holds all the registers for every pump in the F series and discovers them automatically. Now that the communication troubles are hopefully solved, I can move on to completing the NodeRED nodes.
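The fix can be illustrated with a small stateful reader. This is a hypothetical sketch, not NibePi's backend.js: it works on Buffers directly and only discards bytes up to a 0x5C while it is still looking for a frame start (the role of fs_start). Once a frame has started, every later 0x5C, such as one half of an escaped 5c5c pair, is kept. The class and method names are illustrative.

```javascript
class FrameReader {
  constructor() {
    this.started = false; // plays the role of fs_start
    this.pending = Buffer.alloc(0);
  }

  push(chunk) {
    if (!this.started) {
      const index = chunk.indexOf(0x5c);
      if (index === -1) return; // no start byte yet: discard noise
      chunk = chunk.subarray(index); // drop bytes before the start byte
      this.started = true;
    }
    // Already inside a frame: never search for 0x5C again, just append.
    this.pending = Buffer.concat([this.pending, chunk]);
  }

  reset() {
    // Call after the frame has been ACKed/NACKed.
    this.started = false;
    this.pending = Buffer.alloc(0);
  }
}

const reader = new FrameReader();
reader.push(Buffer.from([0x01, 0x5c, 0x00, 0x20, 0x68]));
reader.push(Buffer.from([0x02, 0x5c, 0x5c, 0x00])); // escaped 0x5C must survive
console.log(reader.pending.toString("hex")); // 5c002068025c5c00
```

Without the `started` check, the second push would slice at its 0x5C and drop the 0x02 length byte, which is one way to end up with the short messages described above.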
